This product emerged from my Tangible Interfaces course at MIT Media Lab, led by Professor Hiroshi Ishii. The class is built around "Telepresence," a concept that seeks to use technology to reconnect people who are separated in space and time.

“By seeing, hearing, and feeling a physical object move on its own in our hands, we imagine a person’s physical presence even though they are physically absent.”

I teamed up with three teammates with backgrounds in engineering and graphic design, and I led concept ideation and interaction design. Through team discussion, we agreed that sound and music serve as a universal language with a low barrier to entry. This led us to explore how sound could be used as a medium to bring people together.

For the final class deliverable, we presented a working prototype that enables users to collaboratively create music, along with a comprehensive presentation.


To further develop our concept, I designed an iPad game that leverages the intuitive touchscreen interface, allowing users to interact with sound-making through playful actions.

“Telepresence” uses technology to bring people closer, no matter where they are. We wanted to make these connections feel more real by blending digital and physical elements. Since music is a universal way for people to connect, we decided to focus on creating a sound-making experience. To achieve this goal, we drew inspiration from a wide range of musical artifacts across different eras, which helped us shape and refine our concept.

To shape our concept, we surveyed 30 people about how they like to build relationships with others. The feedback was clear: most respondents are drawn to connecting through hands-on, tangible interactions. We explored different ideas, especially around haptics, sound, and collaboration. Before going too deep, we checked whether others shared our excitement, and the response was very positive.

Inspired by their enthusiasm, we decided to create a collaborative music-making experience that brings friends together through creativity and connection, and we refined the key user problems we wanted to tackle.

Our design goal centers on finding new ways to use sound as a medium to bring people together, strengthening social bonds through shared musical experiences. We chose three basic shapes (circle, triangle, and square) and three primary colors (red, blue, and yellow) because they are fundamental and universally recognized, which helps lower the barrier to entry for users.

This scenario explores how people can engage in collaborative sound-making when they are in different geographical locations.

For individuals interested in music composition, this use case focuses on empowering users to create their own musical pieces independently.

This use case looks at scenarios where individuals in close proximity can collaborate on sound-making projects together.

Although we were interested in all three directions, we had to set aside the long-distance collaboration use case due to time constraints in the class, focusing instead on close-distance collaboration and the single-user experience.

We used physical objects and Python programming to create an interactive sound-making experience. We began by carefully selecting materials that let users engage with sound physically, turning sound creation into a hands-on activity. Using Python, we connected these tangible objects to digital processes, enabling real-time sound manipulation and interaction. Through continuous testing and refinement, we arrived at a prototype in which physical touch directly shapes digital sound.


Looking back, teamwork was key to our success. Our final presentation received great feedback, reinforcing how much we achieved together. Even with tight deadlines, we tackled the first part of our challenge: connecting people in close proximity. With that milestone reached, I took on the next step myself: finding ways to connect people across long distances, an opportunity to push our idea further and explore new possibilities.

While exploring long-distance collaboration, I realized many potential users weren’t familiar with music composition. To better understand what would engage them, I interviewed college friends about the platforms they enjoy. Many suggested an iPad game, which helped shape my direction. I also tested our class prototype with users, observing how they interacted with it and gathering feedback. From these user tests, I identified three key insights to guide my design.

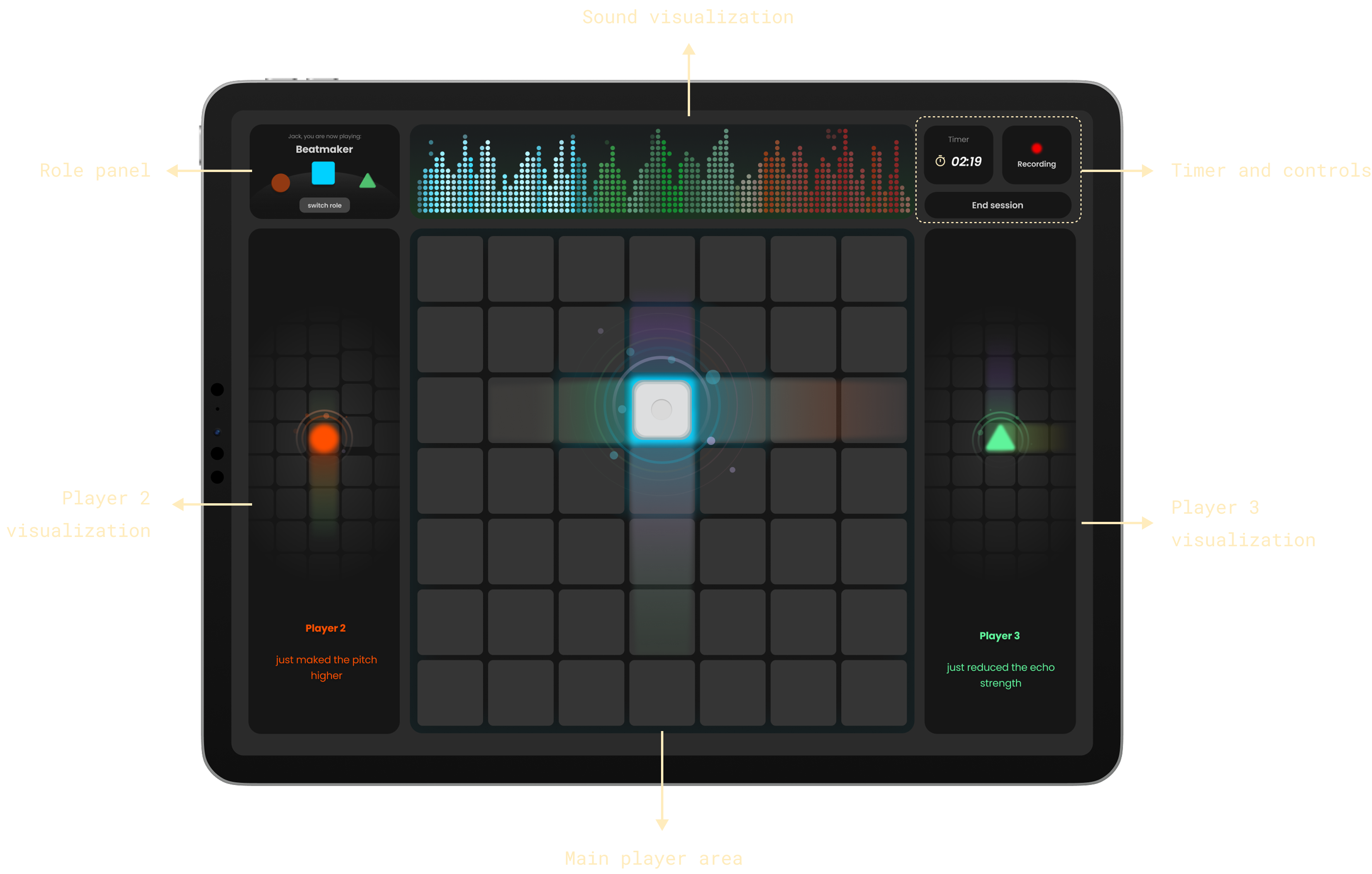

With these insights in hand, I began designing the game's interactions. Because users responded well to the circle, triangle, and square shapes, I decided to keep them in my design. Adding grid-based zones felt like a natural way to enhance the overall experience.

Each shape interacts with grid-based zones on the iPad screen, triggering different behaviors and effects depending on where it is placed. This grid system allows users to explore a wide array of soundscapes and effects by simply moving the shapes across the screen. Each zone may represent a unique audio effect or musical element, prompting users to experiment with various combinations and arrangements.
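To make the shape-and-zone idea concrete, here is a minimal Python sketch of how a shape's position on screen could be resolved to a grid zone and then to a sound and effect. It is an illustration under stated assumptions, not the game's actual implementation: the 1024×768 screen, the 3×3 grid, and every sound and effect name (`SHAPE_SOUNDS`, `ZONE_EFFECTS`, "reverb", "bell", and so on) are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical screen size and grid layout; the real game's values are not specified.
SCREEN_W, SCREEN_H = 1024, 768
COLS, ROWS = 3, 3

# Hypothetical effect assigned to each zone, indexed [row][col].
ZONE_EFFECTS = [
    ["reverb", "echo", "delay"],
    ["low_pass", "dry", "high_pass"],
    ["pitch_down", "tremolo", "pitch_up"],
]

# Hypothetical base sound for each shape.
SHAPE_SOUNDS = {"circle": "drum", "triangle": "bell", "square": "bass"}


@dataclass
class Placement:
    shape: str
    x: float
    y: float


def zone_for(x: float, y: float) -> tuple[int, int]:
    """Return (row, col) of the grid cell containing (x, y), clamped to the grid edges."""
    col = min(max(int(x * COLS / SCREEN_W), 0), COLS - 1)
    row = min(max(int(y * ROWS / SCREEN_H), 0), ROWS - 1)
    return row, col


def resolve(p: Placement) -> tuple[str, str]:
    """Map a placed shape to (sound, effect): the shape picks the sound, the zone picks the effect."""
    row, col = zone_for(p.x, p.y)
    return SHAPE_SOUNDS[p.shape], ZONE_EFFECTS[row][col]


if __name__ == "__main__":
    # A triangle near the top-right corner lands in zone (0, 2).
    print(resolve(Placement("triangle", 900, 100)))  # ('bell', 'delay')
```

Keeping the shape-to-sound and zone-to-effect tables separate means that moving a shape to a new zone changes only its effect, which mirrors the "explore by moving shapes across the screen" behavior described above.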

The core of this game is real-time collaboration between players. The goal is to make the game interactive and engaging, encouraging everyone to jump in, experiment, and create together.

I began working on a user flow diagram to map out the overall journey and interactions within the iPad game. This visual representation was crucial in helping me identify key screens and user touchpoints.

From the home dashboard, users can start a new session or review past sound-making projects.

Users can invite others to join their session, with live status updates that keep everyone informed of who is joining and what changes are being made.

Step-by-step instructions walk users through each expected next step so they can get familiar with the game's interactions. Users can also skip the instructions or review them all afterward.

During the game, players move the physical shapes across the grid to adjust the sound while seeing other players' movements in real time. The sound bar at the top visualizes the sound vibrations, and players hear the music update live.

Switching roles lets players explore different musical perspectives. Because switches usually happen mid-game, one player sends a switch request, and the other player receives a notification and can either accept or pass on it.
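The request-and-respond flow for switching roles can be sketched as a small state machine. This is a minimal, in-memory Python illustration under assumptions: the `Session` class, the role names ("rhythm", "melody"), and the notification text are hypothetical, and a real multiplayer game would also need networking and synchronization, which are omitted here.

```python
from dataclasses import dataclass, field
from enum import Enum


class RequestStatus(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    DECLINED = "declined"


@dataclass
class Session:
    # Hypothetical in-memory session state: player name -> current role.
    roles: dict[str, str]
    notifications: dict[str, list[str]] = field(default_factory=dict)
    requests: dict[tuple[str, str], RequestStatus] = field(default_factory=dict)

    def request_switch(self, sender: str, receiver: str) -> None:
        """Record a pending switch request and queue a notification for the receiver."""
        self.requests[(sender, receiver)] = RequestStatus.PENDING
        self.notifications.setdefault(receiver, []).append(
            f"{sender} wants to switch roles with you"
        )

    def respond(self, sender: str, receiver: str, accept: bool) -> RequestStatus:
        """The receiver accepts (roles are swapped) or passes (roles stay the same)."""
        key = (sender, receiver)
        if self.requests.get(key) is not RequestStatus.PENDING:
            raise ValueError("no pending switch request between these players")
        if accept:
            self.roles[sender], self.roles[receiver] = self.roles[receiver], self.roles[sender]
            status = RequestStatus.ACCEPTED
        else:
            status = RequestStatus.DECLINED
        self.requests[key] = status
        return status


if __name__ == "__main__":
    s = Session(roles={"ana": "rhythm", "ben": "melody"})
    s.request_switch("ana", "ben")
    print(s.notifications["ben"])  # ['ana wants to switch roles with you']
    print(s.respond("ana", "ben", accept=True))  # RequestStatus.ACCEPTED
    print(s.roles)  # {'ana': 'melody', 'ben': 'rhythm'}
```

Requiring a pending request before any response keeps a stray or duplicate "accept" from swapping roles unexpectedly, so the game only changes roles when both players have agreed.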

Once players agree to switch roles, they jump right back into the game with their new assignments. For those who aren't familiar with their new roles, we provide step-by-step guidance to help them transition smoothly, so the game keeps flowing without interruption.

Once the session ends, the project is automatically saved to the home dashboard. Players can export their composition in various formats for playback on different devices, and they can also share their music on social media platforms.

Working with an amazing team brought a lot of excitement and learning. Each of us had different strengths, like engineering skills or an eye for design, and together we created something really special. It was a great lesson in how important it is to communicate openly, be flexible, and make the most of what everyone brings to the table. Our collaboration not only made the project better but also made working together a lot of fun.

This project helped me grow in surprising ways, especially in exploring design concepts more deeply. While working on the user flow and refining the prototype, I realized how crucial it is to look beyond the surface and really focus on the details. Each challenge turned into an opportunity to expand my understanding and skills, making me better equipped to meet user needs in thoughtful and creative ways.